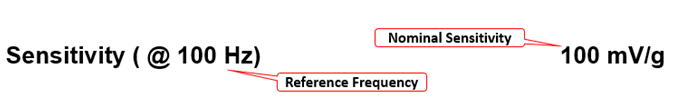

In the article *Percentage Difference vs Deviation in Calibration*, we touched briefly on the role of the reference frequency in frequency response. In accelerometer calibration, 100 Hz or 159 Hz is typically used as the reference frequency. The first common question is: “Why 100 Hz and 159 Hz instead of something ‘simple’ like 1 Hz or 1000 Hz?”

One reason metrologists and manufacturers prefer reference frequencies in the 100 to 159 Hz range is that this range falls within the flat region of the frequency response for most sensors: neither too low nor too high. The ideal reference frequency is the point in the calibration where the response is flat and the uncertainty is lowest. Toward either the low or the high end of a sensor’s frequency response, uncertainty increases. At low frequency, contributors such as the triboelectric effect grow because the large displacements involved cause more cable movement, and other error sources have a larger relative effect because the excitation level is lower. At high frequency, influences such as the sensor’s own dynamics (connector resonances, for example) or the uncertainty contributed by the calibration hardware can also increase with frequency, as is the case with shaker transverse motion. Low uncertainty at the reference frequency allows for tighter nominal sensitivity and frequency response specifications.
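To put numbers on the low-frequency point, the sketch below rearranges the sinusoidal relation A = ω²X into X = A/ω² and computes the peak displacement needed to hold a fixed peak acceleration at several frequencies. The 10 m/s² excitation level is an arbitrary assumption chosen only for illustration, and `displacement_for_acceleration` is a hypothetical helper name.

```python
import math


def displacement_for_acceleration(accel_peak: float, freq_hz: float) -> float:
    """Peak displacement (m) needed for a given peak acceleration (m/s^2)
    in sinusoidal motion, from A = omega^2 * X."""
    omega = 2 * math.pi * freq_hz  # angular frequency, rad/s
    return accel_peak / omega**2


# Assumed excitation level, for illustration only: 10 m/s^2 peak.
ACCEL_PEAK = 10.0

for f in (1, 10, 100, 159.2, 1000):
    x_mm = displacement_for_acceleration(ACCEL_PEAK, f) * 1e3
    print(f"{f:>7} Hz -> {x_mm:10.4f} mm peak displacement")
```

Because displacement scales as 1/f², holding 10 m/s² at 1 Hz needs roughly 253 mm of peak travel, versus about 0.025 mm at 100 Hz. That is why low-frequency calibrations involve large motion (and cable movement) or else run at a reduced excitation level.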

Given that we have settled on a mid-range frequency for the reference, and that the commonly used frequencies are 100 Hz and 159 Hz, the second common question follows: “Which reference frequency should I use when calibrating my sensor, 100 Hz or 159 Hz?” Luckily, this question has a simple answer: refer to the manufacturer’s specification sheet, and if the reference frequency is not stated there, contact the manufacturer directly. Manufacturers typically specify the frequency at which the nominal sensitivity tolerance is valid, and that frequency should be used as the calibration’s reference frequency.
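As an illustration of why the stated frequency matters, the sketch below checks a measured sensitivity against a nominal value and percentage tolerance, and refuses the comparison when the measurement was not taken at the frequency the spec sheet names. The `SensitivitySpec` class, the 100 mV/g ±5 % at 100 Hz figures, and the measured reading are all hypothetical, not taken from any particular data sheet.

```python
import math
from dataclasses import dataclass


@dataclass
class SensitivitySpec:
    """Hypothetical spec-sheet entry: a nominal sensitivity and its
    tolerance, valid only at the stated reference frequency."""
    nominal_mv_per_g: float
    tolerance_pct: float
    reference_hz: float


def within_nominal(spec: SensitivitySpec, measured_mv_per_g: float,
                   measured_at_hz: float) -> bool:
    """True if the measured sensitivity is within the nominal tolerance.

    Raises ValueError when the measurement was not taken at the spec's
    reference frequency, because the tolerance is not defined there.
    """
    # Allow small rounding differences, e.g. 159.2 Hz vs 159.15 Hz.
    if not math.isclose(measured_at_hz, spec.reference_hz, rel_tol=1e-3):
        raise ValueError(
            f"tolerance is specified at {spec.reference_hz} Hz, "
            f"not at {measured_at_hz} Hz"
        )
    deviation_pct = (100.0 * (measured_mv_per_g - spec.nominal_mv_per_g)
                     / spec.nominal_mv_per_g)
    return abs(deviation_pct) <= spec.tolerance_pct


# Hypothetical values for illustration only: 100 mV/g +/-5 % at 100 Hz.
spec = SensitivitySpec(nominal_mv_per_g=100.0, tolerance_pct=5.0,
                       reference_hz=100.0)
print(within_nominal(spec, measured_mv_per_g=103.2, measured_at_hz=100.0))  # True
```

Raising an error rather than returning `False` keeps a measurement made at the wrong frequency from being silently judged against a tolerance that does not apply to it.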

In the United States, 100 Hz is typically used, as it is a round number with low calibration uncertainty. In some instances, such as with seismic sensors (which have high output and a limited frequency range), the reference frequency is specified at 10 Hz. In Europe, 159 Hz is typically used.

This brings us to our final common question: “Why 159 Hz?” In Europe, the line frequency is 50 Hz, as opposed to 60 Hz in the US. Since 100 Hz is a harmonic (the second) of the 50 Hz line frequency, Europe adopted a different reference frequency to avoid measurement problems caused by interference from line power. The reference frequency of 159 Hz, or more precisely 159.2 Hz, is also associated with an interesting property. For sinusoidal motion, the relationships between the amplitudes of acceleration, velocity, and displacement simplify to the following:

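$$A = \omega^2 X \quad \text{and} \quad V = \omega X$$

where

- $X$ = displacement amplitude
- $V$ = velocity amplitude
- $A$ = acceleration amplitude
- $\omega$ = angular frequency $= 2\pi f$ (in rad/s, with $f$ the frequency in Hz)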
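For readers who want the intermediate step, these relations follow from differentiating a sinusoidal displacement. The derivation below assumes pure sinusoidal motion at a single angular frequency $\omega$; the minus sign and the phase shifts drop out when only amplitudes are compared.

$$
\begin{aligned}
x(t) &= X \sin(\omega t) \\
v(t) &= \frac{dx}{dt} = \omega X \cos(\omega t) &&\Rightarrow\quad V = \omega X \\
a(t) &= \frac{dv}{dt} = -\omega^2 X \sin(\omega t) &&\Rightarrow\quad A = \omega^2 X
\end{aligned}
$$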

When the frequency is 159.2 Hz, the angular frequency $\omega = 2\pi f$ is approximately 1000 rad/s (it is exactly 1000 rad/s at $1000/(2\pi) \approx 159.15$ Hz). This means that converting displacement to velocity, or velocity to acceleration, is simply a matter of multiplying by 1000 at each step (and dividing by 1000 to go the other way).
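A quick numerical check of this shortcut, as a minimal sketch: it computes the frequency at which ω is exactly 1000 rad/s, then walks a displacement amplitude up to velocity and acceleration using V = ωX and A = ωV. The 1 µm starting amplitude and the helper name `omega` are illustrative assumptions.

```python
import math


def omega(freq_hz: float) -> float:
    """Angular frequency in rad/s for a frequency in Hz."""
    return 2 * math.pi * freq_hz


# Frequency at which omega is exactly 1000 rad/s.
f_exact = 1000 / (2 * math.pi)
print(f"f for omega = 1000 rad/s: {f_exact:.2f} Hz")    # 159.15 Hz
print(f"omega at 159.2 Hz: {omega(159.2):.1f} rad/s")  # 1000.3 rad/s

# Walk an assumed 1 um peak displacement up the chain at f_exact.
w = omega(f_exact)
x_m = 1e-6          # displacement amplitude, m (1 um)
v_m_s = w * x_m     # velocity amplitude, m/s (1 mm/s)
a_m_s2 = w * v_m_s  # acceleration amplitude, m/s^2 (1 m/s^2)
print(f"X = {x_m * 1e6:.3f} um, V = {v_m_s * 1e3:.3f} mm/s, A = {a_m_s2:.3f} m/s^2")
```

In other words, at about 159.15 Hz a 1 µm displacement corresponds to 1 mm/s of velocity and 1 m/s² of acceleration, so the same number carries through each unit step; rounding the frequency to 159.2 Hz changes ω by only about 0.03 %.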